Research

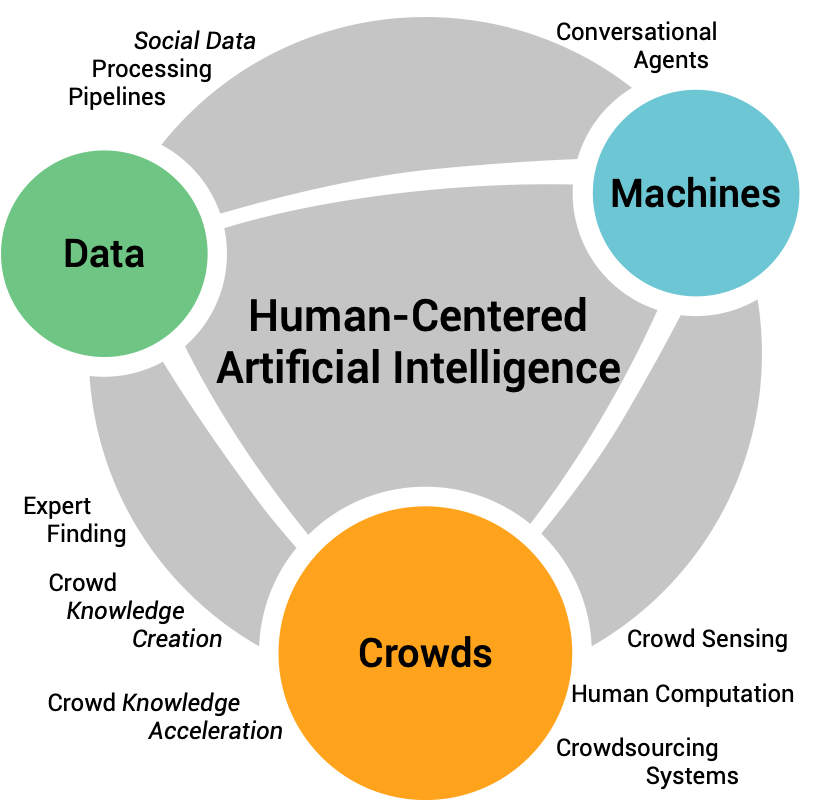

My work on Human-Centered Artificial Intelligence focuses on Crowd Computing. Crowd Computing is a computational paradigm for Human-Centered AI systems that advocates for the adoption of human intelligence at scale to improve the performance of artificial intelligence systems in terms of: accuracy; adherence to people’s values, goals and needs; seamless interaction within complex social settings; and robustness and adaptability to changing, open-world environments.

My research effort is devoted to the creation of mathematical models and computational methods for Crowd Computing, to address both problems of analysis and design of this class of computational systems.

What is Crowd Computing?

Crowd Computing advocates for human-in-the-loop systems, where computational tasks are outsourced to a crowd of individuals, or to communities. Examples of relevant computational tasks include data creation, analysis, or interpretation.

Crowd Computing systems are designed to leverage both the scalability of computational machines and the power of human intelligence, to solve complex tasks that are currently beyond the capabilities of artificial intelligence algorithms, and that cannot be solved by a single person alone. Examples include image understanding, sarcasm detection, audio transcription, fake news identification, and bias detection. Crowd Computing is Artificial Intelligence of the people, by the people, and for the people. Research in Crowd Computing acknowledges the relevance of human, organisational, and societal factors in the development of AI solutions by considering people as critical elements in the design and use of systems, products and services with and for Artificial Intelligence.

In recent years, several successful examples have shown the potential and effectiveness of Crowd Computing: Wikipedia, the ESP game, Galaxy Zoo, online labor markets such as Amazon’s Mechanical Turk, the DARPA Balloon Challenge, FoldIt (a protein folding game), Google Maps, Duolingo, Waze, and others.

At the same time, the Crowd Computing paradigm has enabled (and is still enabling) significant advances in many fields such as Information Retrieval and Machine Learning, with applications to, e.g., computer vision and natural language understanding. ImageNet is the quintessential example, as its availability is considered to be a significant enabler for the “deep learning revolution” of this decade.

Economic and Societal Relevance

Crowd Computing is a research field in Computer Science that is bound to become increasingly relevant in the near future, as testified by many compelling and converging trends.

The recently gained awareness of online content quality issues (e.g., “fake news” and Russian meddling in election processes), combined with the limitations of current artificial intelligence techniques, has put Crowd Computing in the spotlight, highlighting its central role in providing a reliable, transparent, and safe content exploration and consumption experience.

Several reports project the size of the crowd work market to reach between $16 billion and $47 billion by 2020. Gartner predicts that by 2020, more than 40% of data science tasks will be automated, resulting in increased productivity and broader usage by citizen data scientists; 20% of companies will dedicate workers to monitor and guide neural networks. At recent events, researchers from leading Web companies estimated that industries around the world spend around 1 billion dollars a year on human data collection.

Research Challenges

Despite the successful application of the Crowd Computing paradigm to a variety of domains, we still have a poor understanding of Crowd Computing from a design and engineering standpoint.

What is missing is a broad theoretical foundation for Crowd Computing, where successful abstractions and well-understood and tested design principles allow the design, creation, and deployment of robust, efficient, and scalable hybrid systems.

The involvement of humans in computation activities is a fundamental scientific challenge that requires obtaining the best from human abilities, while effectively embedding them into traditional computational processes and systems. Examples of the computer science challenges that are relevant for Crowd Computing systems, and that are currently the object of my research, include abstractions, models and methods for efficient and effective Crowd Computing, and strategies for sustainability.

Abstractions

Crowd Computing systems combine traditional computational and networking resources with human intelligence and contributions. The challenge is to define a level of abstraction able to minimally yet exhaustively capture the essential properties of electronic and human computers. Such abstractions should also allow designing and experimentally validating methods able to capture and measure (at scale) “functional” and “non-functional” properties of crowd computing tasks (e.g., granularity, complexity, clarity), human computational units (e.g., expertise, availability, trustworthiness, bias), and crowd computing systems (e.g., batch/stream processing).

Efficiency and Effectiveness

Humans are naturally slower than machines in terms of information processing; also, while machines compute deterministically, human behavior may be unpredictable, ambiguous, and possibly malicious. The challenge is to develop models and methods able to optimally tap human processing to minimize computational latency and cost while maximizing the quality, generalisability, and explainability of human processing outcomes.
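The cost/quality tension described above can be made concrete with a small simulation. The sketch below is only an illustration under strong, hypothetical assumptions (binary tasks, independent workers who are each correct with a fixed probability, and plain majority voting as the aggregation rule); it is not a method taken from this research. The names `majority_vote`, `simulate_accuracy`, `worker_accuracy`, and `redundancy` are invented for the example.

```python
import random
from collections import Counter


def majority_vote(labels):
    """Return the most common label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]


def simulate_accuracy(worker_accuracy, redundancy, n_tasks=2000, seed=0):
    """Estimate majority-vote accuracy on binary tasks with simulated workers.

    Assumption (hypothetical model): every worker answers independently and
    is correct with probability `worker_accuracy`. `redundancy` is the number
    of answers collected per task, i.e. the per-task cost.
    """
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_tasks):
        truth = rng.choice([0, 1])
        answers = [
            truth if rng.random() < worker_accuracy else 1 - truth
            for _ in range(redundancy)
        ]
        if majority_vote(answers) == truth:
            correct += 1
    return correct / n_tasks


if __name__ == "__main__":
    # More answers per task cost more, but the aggregated label improves.
    for k in (1, 3, 5, 7):
        acc = simulate_accuracy(worker_accuracy=0.7, redundancy=k)
        print(f"redundancy={k} (cost={k} answers/task): accuracy ~ {acc:.3f}")
```

Under these assumptions, aggregated quality rises with redundancy while cost and latency rise with it too. Real crowds violate the independence and fixed-accuracy assumptions, which is part of what makes the optimization problem hard.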

Sustainability

Human participation is a crucial constraint for the success and long-term sustainability of Crowd Computing. The challenge is to design and experimentally test different computer-mediated engagement and retention strategies, based on incentive schemes developed in fields such as behavioral economics and behavioral psychology.

My Research Goals

The long-term objective of my research is to contribute to a foundational theory for Crowd Computing. To this end, both mathematical and experimental research is needed.

Mathematical models and analysis make it possible to reason about the properties and behavior of Crowd Computing systems, supporting rigorous design and testing. Experimental work, in turn, is essential to inform the development of such models and to test their validity.

I have organized my research activities around three application domains, each addressing high-relevance and high-impact societal and industrial needs:

- Crowd Computing for Knowledge Generation, which focuses on the design and validation of Crowd Computing methods and tools for knowledge generation in the context of online communities (e.g., online crowd-work markets, Q&A systems) and content digitalization and access (e.g., cultural heritage and digital libraries);

- Crowd Computing for Urban Data Science, which addresses Crowd Computing methods and tools in the context of urban data and intelligent cities applications; and

- Enterprise Crowd Computing, which addresses Crowd Computing in the context of enterprise-class applications.

My short-term plan targets new classes of Crowd Computing problems characterized by higher levels of task complexity, physical work environments, structured work organization, and financial/educational incentives.

This work addresses novel and relevant open research problems that, once tackled, will bring Crowd Computing to the level of maturity required by a discipline that has such broad societal and economic impact.